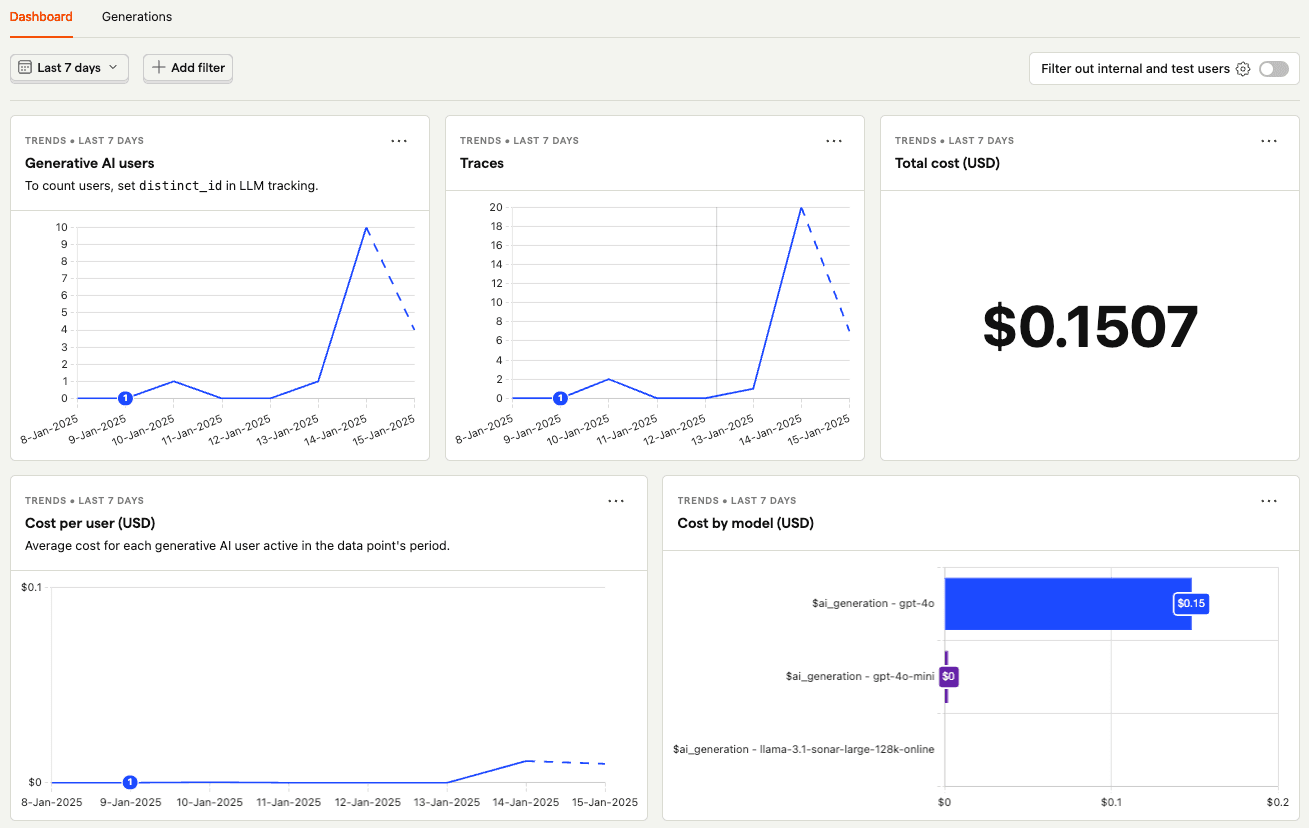

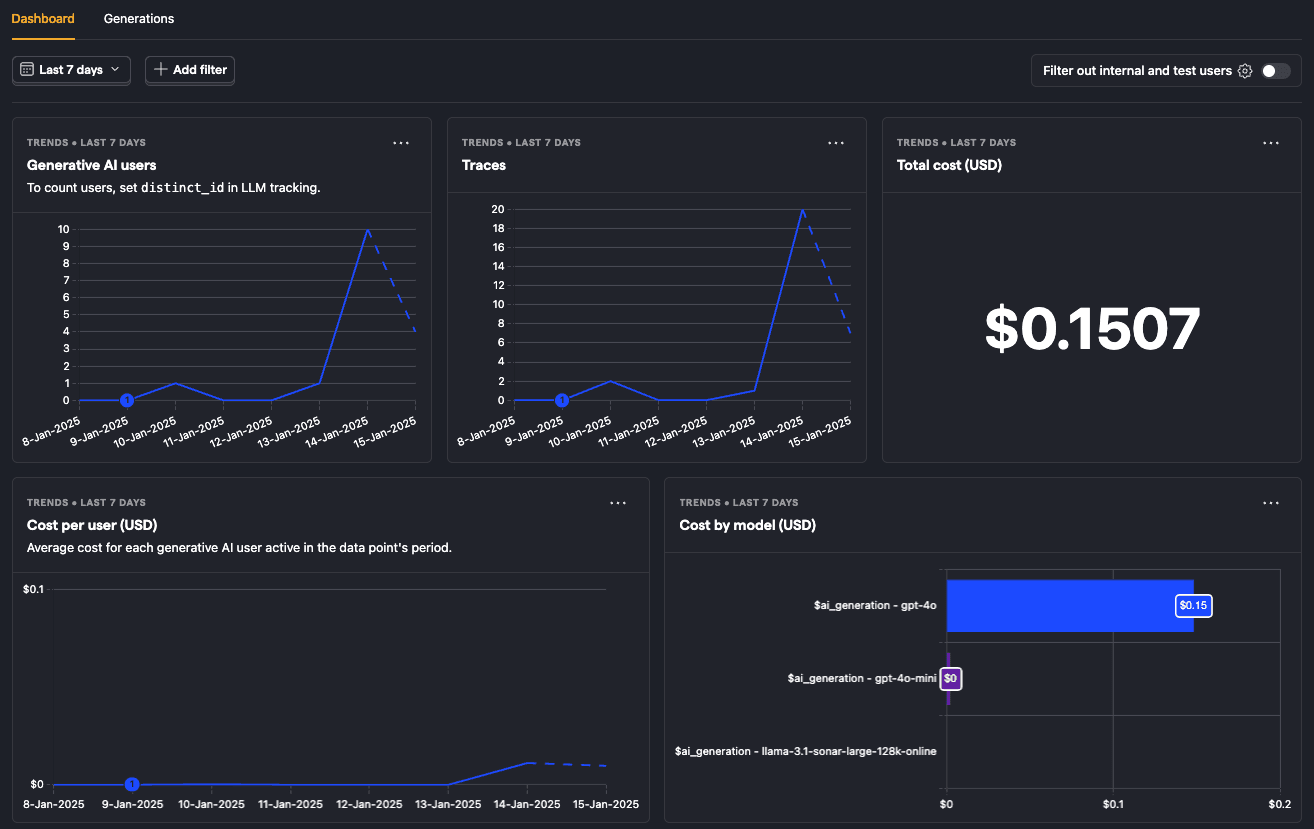

The LLM observability dashboard provides an overview of your LLM usage and performance. It includes insights on:

- Users

- Traces

- Costs

- Generations

- Latency

You can filter it like any other dashboard in PostHog, including by event, person, and group properties. Our observability SDKs autocapture especially useful properties, such as provider, tokens, cost, and model.
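To make property filtering concrete, here is a minimal sketch that narrows a list of generation events by event properties (such as provider) and person properties (such as a plan). The `GenerationEvent` class, the property names (`provider`, `model`, `cost_usd`, `plan`), and the sample data are all hypothetical and do not reflect PostHog's exact event schema. Group properties would work the same way but are omitted for brevity.

```python
from dataclasses import dataclass, field
from typing import Any


# Hypothetical shape of a captured generation event; field names are
# illustrative only, not PostHog's exact schema.
@dataclass
class GenerationEvent:
    distinct_id: str
    properties: dict[str, Any]  # event properties: provider, model, cost, ...
    person_properties: dict[str, Any] = field(default_factory=dict)


def matches(event: GenerationEvent,
            event_filters: dict[str, Any],
            person_filters: dict[str, Any]) -> bool:
    """Return True only if every event and person filter matches exactly."""
    return (
        all(event.properties.get(k) == v for k, v in event_filters.items())
        and all(event.person_properties.get(k) == v for k, v in person_filters.items())
    )


def filter_events(events, event_filters=None, person_filters=None):
    """Keep the events that satisfy all filters, like narrowing a dashboard."""
    return [e for e in events if matches(e, event_filters or {}, person_filters or {})]


# Made-up sample data for illustration.
events = [
    GenerationEvent("user-1", {"provider": "openai", "model": "model-a", "cost_usd": 0.002}, {"plan": "pro"}),
    GenerationEvent("user-2", {"provider": "anthropic", "model": "model-b", "cost_usd": 0.001}, {"plan": "free"}),
    GenerationEvent("user-3", {"provider": "openai", "model": "model-a", "cost_usd": 0.003}, {"plan": "free"}),
]

free_openai = filter_events(events, {"provider": "openai"}, {"plan": "free"})
print([e.distinct_id for e in free_openai])  # ['user-3']
```

Passing no filters returns every event, which mirrors an unfiltered dashboard.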

This dashboard is a great starting point for understanding your LLM usage and performance. You can use it to answer questions like:

- Are users using my LLM-powered features?

- What are my LLM costs by customer, model, and in total?

- Are generations erroring?

- How many of my users are interacting with my LLM features?

- Are there generation latency spikes?
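As a rough illustration of the aggregations behind these questions, the sketch below computes unique users, total cost, cost per model and per user, error rate, and nearest-rank p95 latency from a hypothetical list of generation records. The record layout and the `summarize` function are assumptions made for this example. This is a toy model, not how PostHog computes its dashboard.

```python
import math
from collections import defaultdict

# Hypothetical, simplified generation records:
# (user_id, model, cost_usd, latency_s, is_error). Values are made up.
generations = [
    ("user-1", "model-a", 0.0020, 0.8, False),
    ("user-2", "model-b", 0.0150, 2.4, False),
    ("user-1", "model-a", 0.0030, 1.1, True),
    ("user-3", "model-b", 0.0120, 6.0, False),
]


def summarize(records):
    """Aggregate usage, cost, error, and latency metrics over generation records."""
    if not records:
        raise ValueError("need at least one generation record")
    cost_by_model = defaultdict(float)
    cost_by_user = defaultdict(float)
    latencies = []
    errors = 0
    for user, model, cost, latency, is_error in records:
        cost_by_model[model] += cost
        cost_by_user[user] += cost
        latencies.append(latency)
        errors += int(is_error)
    latencies.sort()
    # Nearest-rank 95th percentile: the smallest value covering 95% of samples.
    p95 = latencies[max(0, math.ceil(0.95 * len(latencies)) - 1)]
    return {
        "unique_users": len(cost_by_user),
        "total_cost_usd": sum(cost_by_model.values()),
        "cost_by_model": dict(cost_by_model),
        "cost_by_user": dict(cost_by_user),
        "error_rate": errors / len(records),
        "p95_latency_s": p95,
    }


print(summarize(generations))
```

A spike in `p95_latency_s` or `error_rate` between time windows is the kind of signal the latency and error insights help surface.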

To dive into specific events, open the Generations or Traces tab to see a list of every generation or trace PostHog has captured.